Deploying a Highly Available Content Delivery Service: CDS 2.x

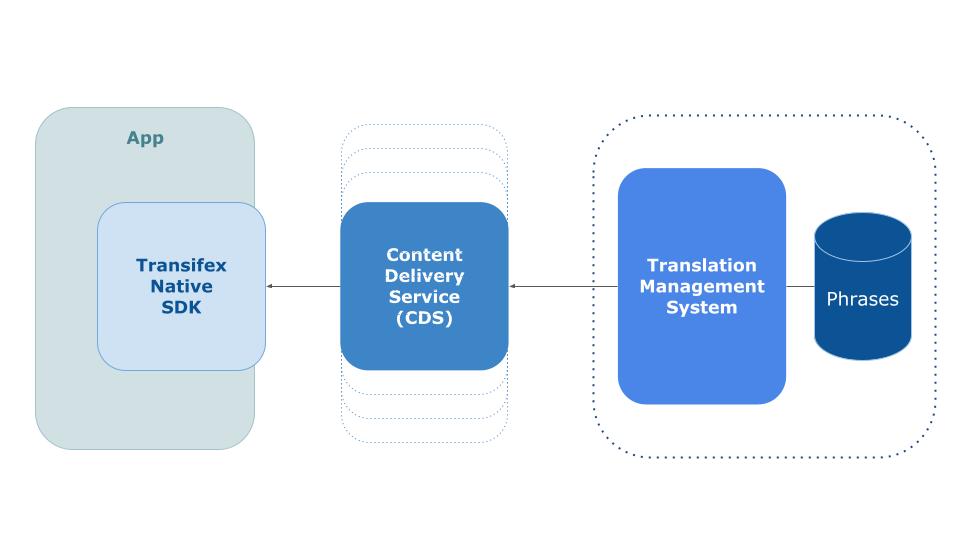

Transifex Native is a technology that enables super easy app localization by taking away the cumbersome process of managing translations through files. It’s a modern replacement for “traditional” code translation via over-the-air, cloud-based localization.

Transifex Native consists of three major components all working seamlessly together to enable a wonderful developer experience:

– Transifex Native SDK: A localization library to embed into the code itself, responsible for the i18n part of the flow.

– Translation Management System: The platform where localization managers and translators work to translate the application phrases into multiple languages.

– Content Delivery Service (CDS): A lightweight service standing between the SDK and the Translation Management System, responsible for super-fast, over-the-air delivery of content to the app and eventually to the end-user.

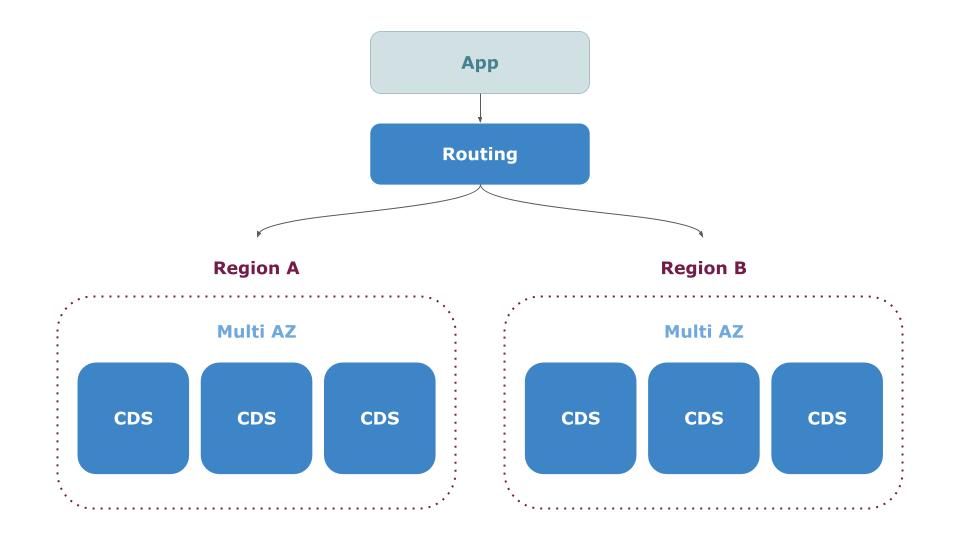

Developers have the option to either use the Transifex hosted CDS (operating on AWS) to deliver content to their users or self-host their own CDS for full control. With CDS 2.x, we managed to support multi-region AWS deployments and enable High Availability setups of the service to mitigate data center incidents.

Let’s face it. All cloud providers experience downtime. But we are aiming for a minimum 99.99% uptime. With version 1.x, we were able to deploy CDS across multiple availability zones within the same region.

With 2.x, we (and you) can seamlessly deploy to both multiple availability zones AND multiple regions.

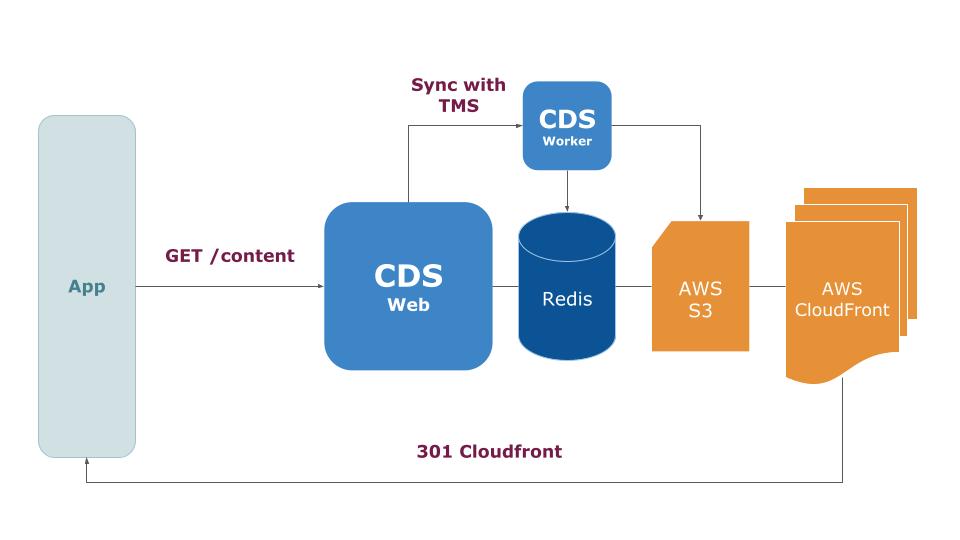

How CDS Works

Before we dive deep into how multi-region deployments work, let’s take a brief look at the basic CDS functionalities and how the various components work for the Transifex hosted version.

Let’s say that we have an app in English localized into Spanish. When the app switches to Spanish, the Transifex Native SDK makes a request to a CDS endpoint to fetch the phrases in Spanish. The request includes:

– A public token

– A target locale (e.g., “es”)

CDS receives the request and performs the following steps:

– Do a lookup in a registry service (implemented in Redis) and check if the Spanish content is cached in the CDS.

– If content is cached, then return a 301 redirect to an AWS Cloudfront URL, which the client can use to download the actual translated phrases by utilizing the Global AWS network.

– If content is not cached, trigger a background job to populate the cache and return a job ID, so that the client can retry the request until the content is ready. As soon as the worker fetches the content from the Transifex Translation Management System, it is stored in an AWS S3 bucket, forwarded to AWS Cloudfront, and finally, a record is stored in the Redis registry, such as:

"token:locale": "https://cloudfront-url/locale.json"

Even though multiple availability zone deployments can ensure uptime for the CDS web and worker pods, they do not guarantee high availability for the Redis instance. In other words, if Redis goes down, CDS goes down with it.

To deploy into multiple regions, we need to enable active-active replication on the Redis instance, available as an offering in Redis Enterprise only. Moreover, using Redis as a primary database for CDS could eventually escalate to requiring a larger and larger Redis instance over time to cover increased needs for memory as more data pours in.
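To make the request flow described earlier in this section more concrete, here is a minimal Python sketch of it. It is a toy model and not the actual CDS code: the class and method names are hypothetical, plain dictionaries stand in for the Redis registry and the job queue, and the 202 status used for the "not cached yet" response is an assumption (the flow above only says that a job ID is returned).

```python
import itertools
from typing import Dict, Tuple


class CdsSketch:
    """Toy model of the CDS request flow (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self.registry: Dict[str, str] = {}  # stands in for the Redis registry
        self.pending: Dict[str, str] = {}   # registry key -> job id
        self._job_ids = itertools.count(1)

    def handle_request(self, token: str, locale: str) -> Tuple[int, str]:
        key = f"{token}:{locale}"
        url = self.registry.get(key)
        if url is not None:
            # Content is cached: redirect the client to the CDN URL.
            return 301, url
        # Content is not cached: schedule one background job per key and
        # return its id so the client can retry later (202 is assumed).
        if key not in self.pending:
            self.pending[key] = f"job-{next(self._job_ids)}"
        return 202, self.pending[key]

    def run_worker(self) -> None:
        """Pretend worker: 'fetch' content, then record its URL in the registry."""
        for key in list(self.pending):
            locale = key.rsplit(":", 1)[1]
            self.registry[key] = f"https://cloudfront-url/{locale}.json"
            del self.pending[key]
```

A client would call `handle_request` repeatedly: it gets the same job ID until the worker has run, and a redirect afterwards.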

Hello DynamoDB

AWS DynamoDB seemed like a viable alternative to replace Redis as a storage engine. It is pretty easy to set up, and it offers support for Global tables with automatic active-active replication between multiple regions.

With CDS 2.x, we abstract the registry-related code and introduce “registry strategies”. This way, CDS can be configured to use alternative solutions for its registry engine by changing a simple config value.

With a brand new “dynamodb” strategy, we can emulate the Redis behavior over a DynamoDB Global table that automatically replicates records between two or more regions. With this setup in place, we can deploy CDS into different AWS regions. That, in turn, gives us the flexibility to add more in the future, depending on our needs.
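The idea of swapping the registry engine through a single config value can be sketched in Python as follows. This is only an illustration of the pattern, not how CDS implements it: the `Registry` interface, the `InMemoryRegistry` stand-in, the `make_registry` helper, and the "redis" default are all assumptions; only the "dynamodb" and "dynamodb-redis" strategy names and the `TX__SETTINGS__REGISTRY` variable come from this post.

```python
from typing import Callable, Dict, Mapping, Optional, Protocol


class Registry(Protocol):
    """Minimal interface every registry strategy is assumed to offer."""

    def get(self, key: str) -> Optional[str]: ...
    def set(self, key: str, value: str) -> None: ...


class InMemoryRegistry:
    """Stand-in backend; a real strategy would talk to Redis or DynamoDB."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


# Strategy name -> factory. The "redis" name is an assumption.
STRATEGIES: Dict[str, Callable[[], InMemoryRegistry]] = {
    "redis": lambda: InMemoryRegistry("redis"),
    "dynamodb": lambda: InMemoryRegistry("dynamodb"),
    "dynamodb-redis": lambda: InMemoryRegistry("dynamodb-redis"),
}


def make_registry(env: Mapping[str, str]) -> InMemoryRegistry:
    """Pick a registry strategy from a single config value."""
    name = env.get("TX__SETTINGS__REGISTRY", "redis")  # default is assumed
    try:
        return STRATEGIES[name]()
    except KeyError:
        raise ValueError(f"unknown registry strategy: {name}") from None
```

The rest of the service only depends on `get` and `set`, so adding a new backend means adding one more entry to the table.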

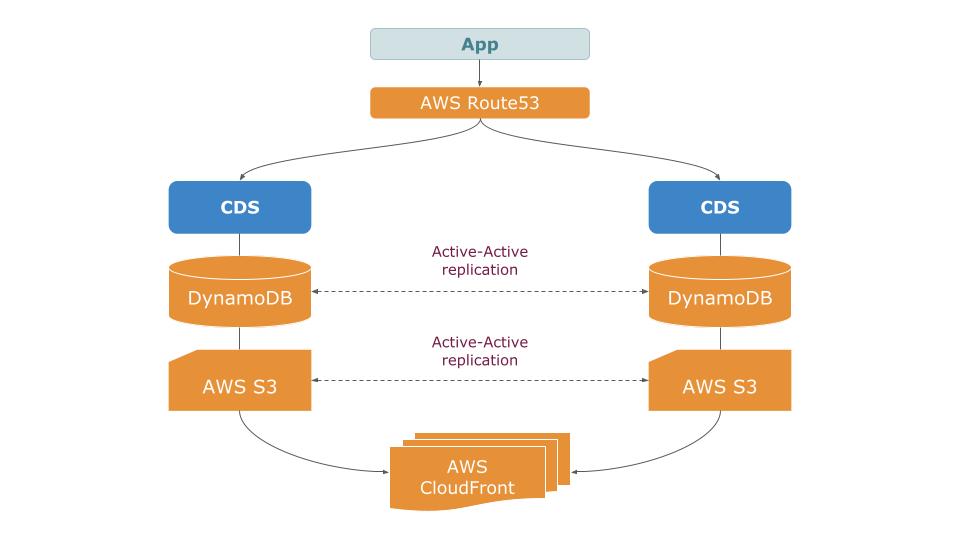

So, the new setup works like this:

– Create region-specific DynamoDB tables

– Create region-specific S3 buckets

– Enable DynamoDB active-active replication using DynamoDB Global tables

– Enable AWS S3 active-active replication

– Set AWS Cloudfront to read from multiple S3 buckets

The new setup works, but it is not quite there yet. Performance testing of the new solution shows the ugly truth: it is hard to beat Redis’ performance. Stress testing CDS with 5 Kubernetes Pods shows the following throughput results:

– CDS with Redis: ~600 requests/sec

– CDS with DynamoDB: ~100 requests/sec

Ensuring premium performance on the CDS endpoints has always been goal number one, since the initial design of the service. This shows that we are not quite there yet, and we certainly are not ready to make this kind of compromise.

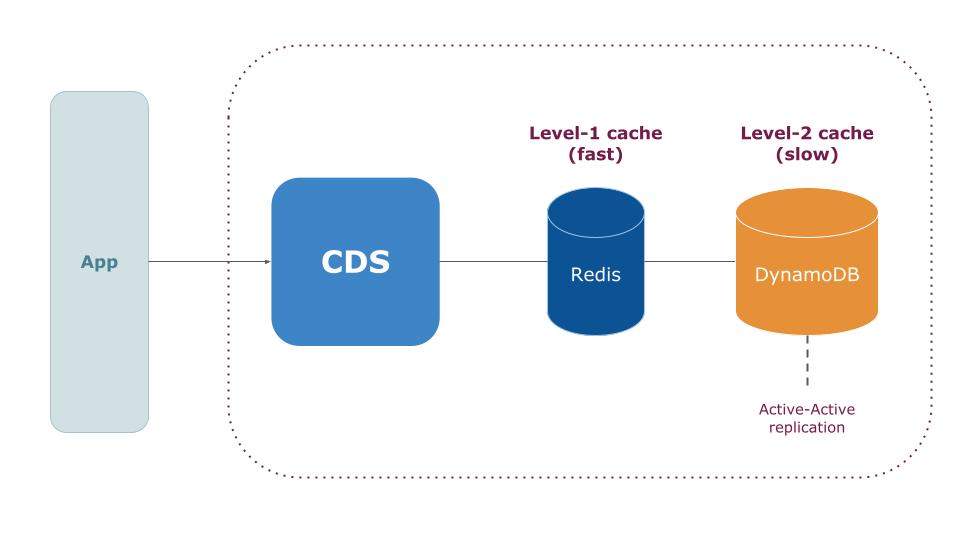

Multi-Layer Caching to the Rescue

So, what if we combine the power of both technologies? Use DynamoDB Global table as a reliable, scalable, and cross-region solution for syncing data, but at the same time utilize the speed of Redis on the region-specific deployments?

Following this path, we added a new “dynamodb-redis” strategy that works as level-1 and level-2 caching. So, when a request is made to the CDS, the flow is modified as follows:

– Is the key in the local Redis? (Level-1 cache)

– If yes, then return the value and mark the Redis key for invalidation.

– If not, then check DynamoDB (Level-2 cache), return the value, and set the local Redis (Level-1 cache) for future lookups.

– Periodically check if invalidated Redis keys are still fresh by checking their DynamoDB value in the background.
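The lookup flow above can be sketched in Python like this. It is a simplified model under stated assumptions, not the CDS implementation: two dictionaries stand in for the local Redis (level 1) and the DynamoDB Global table (level 2), the class and method names are hypothetical, and dropping a level-1 entry whose level-2 record has disappeared is our own choice for the sketch.

```python
from typing import Dict, Optional, Set


class TwoLevelRegistry:
    """Level-1 (local Redis stand-in) in front of level-2 (DynamoDB stand-in)."""

    def __init__(self, l1: Dict[str, str], l2: Dict[str, str]) -> None:
        self.l1 = l1
        self.l2 = l2
        self.marked: Set[str] = set()  # L1 keys waiting for a freshness check

    def get(self, key: str) -> Optional[str]:
        if key in self.l1:
            # L1 hit: answer immediately, re-check the key in the background.
            self.marked.add(key)
            return self.l1[key]
        # L1 miss: fall back to L2 and remember the value locally.
        value = self.l2.get(key)
        if value is not None:
            self.l1[key] = value
        return value

    def refresh_marked(self) -> int:
        """Background step: sync marked L1 keys with L2; return how many changed."""
        changed = 0
        for key in list(self.marked):
            fresh = self.l2.get(key)
            if fresh is None:
                # Assumption: drop local entries that no longer exist in L2.
                if self.l1.pop(key, None) is not None:
                    changed += 1
            elif self.l1.get(key) != fresh:
                self.l1[key] = fresh
                changed += 1
            self.marked.discard(key)
        return changed
```

The trade-off is visible in the sketch: a value replicated from another region is served stale from level 1 until the next background refresh picks it up.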

With this strategy in place, we can see an increase in throughput. Updated benchmarks show the following results:

– CDS with Redis: ~600 requests/sec

– CDS with DynamoDB: ~100 requests/sec

– CDS with DynamoDB-Redis: ~500 requests/sec

Not bad, right?

Routing Requests

With all the ingredients in place, and CDS working great in multiple regions, there is one last step left: ensuring transparent high availability and zero downtime for the end users.

The goal is to make sure that all requests to the CDS endpoint are routed to the appropriate region, based on their availability. So, for example, if the eu-west region is down, serve all traffic from eu-north or any other available region in the mix.

We made this happen using AWS Route53 and setting some advanced routing options. By default, we set all traffic to be split evenly between all the available regions. A health check over the region-specific CDS instances ensures that if a region is down, its status is set to “unhealthy,” and all requests are routed to the “healthy” regions until it becomes “healthy” again.
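The routing behavior described above (an even split across regions, with unhealthy regions taken out of rotation) can be simulated with a few lines of Python. This only models the idea and does not call Route53: the `pick_region` function is hypothetical, the region names are examples, and returning `None` when no region is healthy is a simplification of the sketch rather than a statement about how DNS behaves in that case.

```python
import random
from typing import Dict, Optional


def pick_region(health: Dict[str, bool], rng: random.Random) -> Optional[str]:
    """Choose a region uniformly among those currently marked healthy."""
    healthy = sorted(region for region, ok in health.items() if ok)
    if not healthy:
        return None  # simplification: this case is not modeled here
    return rng.choice(healthy)


if __name__ == "__main__":
    rng = random.Random(0)
    health = {"eu-west": True, "eu-north": True}
    print(pick_region(health, rng))  # either region
    health["eu-west"] = False        # health check marks the region unhealthy
    print(pick_region(health, rng))  # always "eu-north" from now on
```

Once the health check flips `eu-west` back to healthy, it rejoins the pool and traffic is split evenly again.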

Let’s get Technical

So far, we have explored how the new design works. Now let’s see how we can deploy a similar setup in our own AWS infrastructure by following some simple steps.

We assume that you are already familiar with the steps required to enable NodeJS AWS authentication, as described in the official AWS guide, and that you are comfortable deploying apps via Kubernetes, Elastic Beanstalk, or plain EC2 using the official CDS docker image.

The approach is to deploy CDS in its simplest form and gradually enable new infrastructure. This way, we can ensure that what we have in place is working before moving on to more complex setups, including DynamoDB.

CDS With Redis

The minimum requirement to have CDS up and running is a provisioned Redis instance. With a Redis host in place, you can set the following environment variable, so that CDS can locate it and use it to store data:

TX__REDIS__HOST="redis://tx-cds-redis-url:port"

Then, we can test that everything works using the following curl command:

curl -X GET -H "Authorization: Bearer 1/066926bd75f0d9e52fce00c2208ac791ca0cd2c1" https://tx-cds-host/content/en -v -L

Content Delivery Service With S3

With the previous example, content is fetched from Transifex and stored in Redis itself. Let’s add AWS S3 to the mix, so that we can scale up and use AWS Cloudfront as a global distributor in the next step.

Provision an S3 bucket and grant the app access to it. Then, set the following environment variables to instruct CDS to use S3, and redeploy the app:

TX__SETTINGS__CACHE=s3
TX__CACHE__S3__BUCKET=my-cds-bucket
TX__CACHE__S3__ACL="public-read"
TX__CACHE__S3__LOCATION="https://my-cds-bucket-url/"

Test again with the previous curl command and verify that content is returned in the response.
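The `TX__` variables used in these steps look like they follow a naming convention where double underscores separate nesting levels. Assuming that reading is right (it is our interpretation, not something confirmed here), the Python sketch below shows how such variables could be folded into a nested config. The `parse_tx_env` helper is hypothetical and is not part of CDS.

```python
from typing import Any, Dict, Mapping


def parse_tx_env(env: Mapping[str, str], prefix: str = "TX__", sep: str = "__") -> Dict[str, Any]:
    """Fold PREFIX-ed variables into a nested dict, splitting names on `sep`."""
    config: Dict[str, Any] = {}
    for name, value in env.items():
        if not name.startswith(prefix):
            continue  # ignore unrelated environment variables
        path = [part.lower() for part in name[len(prefix):].split(sep)]
        node = config
        for part in path[:-1]:
            node = node.setdefault(part, {})
        node[path[-1]] = value
    return config


if __name__ == "__main__":
    example = {
        "TX__SETTINGS__CACHE": "s3",
        "TX__CACHE__S3__BUCKET": "my-cds-bucket",
        "PATH": "/usr/bin",
    }
    print(parse_tx_env(example))
```

Under this reading, `TX__SETTINGS__CACHE=s3` selects the cache engine, while the `TX__CACHE__S3__*` variables configure that engine.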

Content Delivery Service with Cloudfront

Provision an AWS Cloudfront service and connect it with the S3 bucket. Then, update the following environment variable, to instruct CDS to use Cloudfront instead of S3:

TX__CACHE__S3__LOCATION="https://my-cloudfront-url/"

Again, use curl to verify that everything works and that content is served through Cloudfront to the client.

Content Delivery Service with DynamoDB

Now we are ready for the final step: enabling AWS DynamoDB, so that we can set up multi-region deployments.

We can use the AWS CLI or UI to provision a DynamoDB table and set up the required schema. For example:

$ aws dynamodb create-table \
    --table-name transifex-delivery \
    --attribute-definitions AttributeName=key,AttributeType=S \
    --key-schema AttributeName=key,KeyType=HASH \
    --provisioned-throughput ReadCapacityUnits=5,WriteCapacityUnits=5

Then enable TTL on the “ttl” attribute:

$ aws dynamodb update-time-to-live \
    --table-name transifex-delivery \
    --time-to-live-specification Enabled=true,AttributeName=ttl

The app should have the following permissions enabled over the DynamoDB table:

dynamodb:DescribeTable

dynamodb:DescribeTimeToLive

dynamodb:UpdateTimeToLive

dynamodb:DescribeLimits

dynamodb:BatchGetItem

dynamodb:BatchWriteItem

dynamodb:DeleteItem

dynamodb:GetItem

dynamodb:GetRecords

dynamodb:PutItem

dynamodb:Query

dynamodb:UpdateItem

dynamodb:Scan

After the table is created and access is set, we should update the following environment variables to instruct CDS to use our DynamoDB table:

TX__SETTINGS__REGISTRY=dynamodb-redis

TX__DYNAMODB__TABLE_NAME=transifex-delivery

Again, using curl, we should verify that everything works.
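To get a feel for what a record in the table created above might look like, here is a small Python sketch that builds one item in DynamoDB's low-level attribute-value format. The string hash key named "key" and the "ttl" attribute match the table definition from this section, and DynamoDB expects the TTL attribute to be a Number holding a Unix epoch timestamp in seconds. The "value" attribute name and the `build_registry_item` helper are assumptions made for illustration; the actual item layout CDS uses may differ.

```python
import time
from typing import Dict, Optional


def build_registry_item(
    key: str,
    value: str,
    ttl_seconds: int,
    now: Optional[float] = None,
) -> Dict[str, Dict[str, str]]:
    """Build a DynamoDB item that expires `ttl_seconds` from `now`."""
    current = time.time() if now is None else now
    expires_at = int(current) + ttl_seconds  # epoch seconds, as TTL requires
    return {
        "key": {"S": key},              # hash key from the table schema
        "value": {"S": value},          # attribute name is an assumption
        "ttl": {"N": str(expires_at)},  # numbers are sent as strings
    }


if __name__ == "__main__":
    item = build_registry_item(
        "token:es", "https://cloudfront-url/es.json", ttl_seconds=3600
    )
    print(item)
```

An item shaped like this could be passed to a `PutItem` call; once the timestamp in "ttl" has passed, DynamoDB becomes free to delete the record in the background.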

Multi-Region Content Delivery Service

With this setup in place, we can now focus on the DevOps side and reproduce the same setup in another region. After we verify that everything works in the second region, we can enable active-active replication on the S3 buckets and DynamoDB tables (using a global table), then update Cloudfront to read from both S3 buckets.

The last step involves configuring AWS Route53, with the routing rules of your choice, in order to route traffic based on a 50-50 split, geolocation, or any other options, depending on your needs.

Wrapping Up

We are so excited about how Transifex Native continues to evolve. We strongly believe that the future of localization lies in the cloud. CDS is an open-source NodeJS application, and everybody is welcome to contribute their ideas.
